Carlo Jessurun is a Cloud Security Consultant at CloudNation and is continuously looking out for the next step in securing workloads. In this blog he shares his experience with Cilium and Hubble.

If you run cloud native workloads, you had better secure them. After all, services are often exposed to the public and workloads might belong to various tenants. In this blog post we demonstrate how we use Isovalent’s Cilium and Hubble to build eBPF-based network observability and security with several of our customers.

Cilium can both observe and enforce behaviour inside a Linux system. It can collect and filter security observability data directly in the kernel and export it to user space as JSON events and/or store them in a specific log file via a DaemonSet called hubble. These JSON events are enriched with Kubernetes identity-aware information (services, labels, namespaces, pods and containers) and with OS-level process visibility data (process binaries, pids, uids and parent binaries, with the full process ancestry tree). These events can then be exported in a variety of formats and sent to external systems such as a SIEM (e.g. Elasticsearch or Splunk) or stored in an S3 bucket.

By leveraging this real-time network and process-level visibility data from the kernel via Cilium, security teams are able to see all the processes that have been executed in their Kubernetes environment. This helps them make continuous, data-driven decisions and improve the security posture of their systems. One such example is detecting a container escape.

What is Cilium?

Cilium is open source software for transparently securing the network connectivity between application services deployed using Linux container management platforms like Docker and Kubernetes.

At the foundation of Cilium is a new Linux kernel technology called eBPF, which enables the dynamic insertion of powerful security visibility and control logic within Linux itself. Because eBPF runs inside the Linux kernel, Cilium security policies can be applied and updated without any changes to the application code or container configuration.

What is Hubble?

Hubble is a fully distributed networking and security observability platform. It is built on top of Cilium and eBPF to enable deep visibility into the communication and behavior of services as well as the networking infrastructure in a completely transparent manner.

By building on top of Cilium, Hubble can leverage eBPF for visibility. By relying on eBPF, all visibility is programmable and allows for a dynamic approach that minimizes overhead while providing deep and detailed visibility as required by users. Hubble has been created and specifically designed to make best use of these new eBPF powers.

Why Cilium & Hubble?

eBPF is enabling visibility into and control over systems and applications at a granularity and efficiency that was not possible before. It does so in a completely transparent way, without requiring the application to change in any way. eBPF is equally well-equipped to handle modern containerized workloads as well as more traditional workloads such as virtual machines and standard Linux processes.

The development of modern datacenter applications has shifted to a service-oriented architecture often referred to as microservices, wherein a large application is split into small independent services that communicate with each other via APIs using lightweight protocols like HTTP. Microservices applications tend to be highly dynamic, with individual containers getting started or destroyed as the application scales out / in to adapt to load changes and during rolling updates that are deployed as part of continuous delivery.

This shift toward highly dynamic microservices presents both a challenge and an opportunity in terms of securing connectivity between microservices. Traditional Linux network security approaches (e.g., iptables) filter on IP address and TCP/UDP ports, but IP addresses frequently churn in dynamic microservices environments. The highly volatile life cycle of containers causes these approaches to struggle to scale alongside the application, as load balancing tables and access control lists carrying hundreds of thousands of rules need to be updated with continuously growing frequency. Protocol ports (e.g. TCP port 80 for HTTP traffic) can no longer be used to differentiate between application traffic for security purposes, as the port is used for a wide range of messages across services. We can observe such a trace in Cilium Enterprise below:

Combining Network & Runtime Visibility

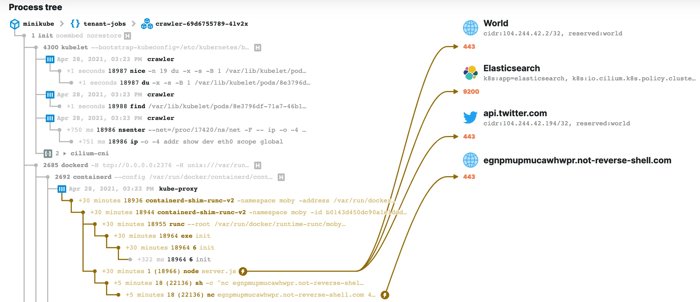

An exciting aspect of Cilium is that we can combine it with Tetragon, another eBPF project from Isovalent, to bring together aspects of visibility that have often been treated separately so far. Here is an example of combined network and runtime visibility that demonstrates the ability to identify which processes are involved in which type of network communication. The following example shows the use of Tetragon to observe a Kubernetes pod that was compromised and subject to a lateral movement attack:

[Diagram: image-20220706-075914.png]

In the above diagram we see a classical lateral movement attack via a reverse shell:

- A Kubernetes pod “crawler-c57f9778c-wtcbc” is running in the Kubernetes namespace “tenant-jobs”. The pod is run via containerd which is running as a sub process of the PID 1 init process. The binary running inside of the pod is called “crawler” which in turn spawns a node process executing “server.js”.

- The node app makes egress network connections to “twitter.com” as well as a Kubernetes service elasticsearch.

- Five minutes after the pod was started, another sub process invoking netcat (nc) was started. Seeing runtime and network observability combined, it is obvious that this is an ongoing reverse shell attack.

- The attacker can then be observed running curl to reach out to the internal elasticsearch server and then using curl to upload the retrieved data to an S3 bucket.

Putting it all together on AWS EKS

In this blog we explain how to provision a Kubernetes cluster without kube-proxy, and to use Cilium to fully replace it. For simplicity, we will use kubeadm to bootstrap the cluster.

For installing kubeadm and for more provisioning options please refer to the official kubeadm documentation.

Cilium’s kube-proxy replacement depends on the Host-Reachable Services feature, therefore a v4.19.57, v5.1.16, v5.2.0 or more recent Linux kernel is required. Linux kernels v5.3 and v5.8 add additional features that Cilium can use to further optimize the kube-proxy replacement implementation.

Note that v5.0.y kernels do not have the fix required to run the kube-proxy replacement, since the v5.0.y stable kernel is end-of-life (EOL) and no longer maintained on http://kernel.org. For kernels maintained by individual distributions the situation could differ, so please check with your distribution.
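The version requirements above can be checked on a node before installing anything. The following is a minimal sketch in POSIX shell, assuming only the version thresholds quoted above; the function name kernel_supports_kpr is our own and not part of Cilium, and distribution kernels with backported fixes may be reported as unsupported even though they would work.

```shell
# Succeeds (exit status 0) if a kernel release string meets the minimum
# versions quoted above: 4.19.57+ on 4.19.y, 5.1.16+ on 5.1.y, or 5.2 and newer.
# kernel_supports_kpr is a hypothetical helper name, not a Cilium command.
# Note: plain POSIX sh has no local variables, so these names are global.
kernel_supports_kpr() {
  release="${1:-$(uname -r)}"      # default to the running kernel
  release="${release%%-*}"         # drop suffixes such as "-8-amd64"
  major="${release%%.*}"
  rest="${release#*.}"
  minor="${rest%%.*}"
  case "$rest" in
    *.*) patch="${rest#*.}" ;;
    *)   patch=0 ;;
  esac
  # Keep only the leading digits (e.g. "57+" becomes "57").
  minor="${minor%%[!0-9]*}"
  patch="${patch%%[!0-9]*}"
  patch="${patch:-0}"

  if [ "$major" -gt 5 ]; then return 0; fi
  if [ "$major" -eq 5 ] && [ "$minor" -ge 2 ]; then return 0; fi
  if [ "$major" -eq 5 ] && [ "$minor" -eq 1 ] && [ "$patch" -ge 16 ]; then return 0; fi
  if [ "$major" -eq 4 ] && [ "$minor" -eq 19 ] && [ "$patch" -ge 57 ]; then return 0; fi
  return 1
}

# Usage on a node:
#   kernel_supports_kpr && echo "kernel OK" || echo "kernel too old"
```

Called without an argument the function inspects the running kernel; passing a release string makes it easy to check a node image ahead of time.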

Initialising Cilium and Hubble on EKS

Initialize the control-plane node via kubeadm init and skip the installation of the kube-proxy add-on:

$ kubeadm init --skip-phases=addon/kube-proxy

Afterwards, join worker nodes by specifying the control-plane node IP address and the token returned by kubeadm init:

$ kubeadm join <..>

Please ensure that kubelet’s --node-ip is set correctly on each worker if you have multiple interfaces. Cilium’s kube-proxy replacement may not work correctly otherwise. You can validate this by running kubectl get nodes -o wide to see whether each node has an InternalIP which is assigned to a device with the same name on each node.

For existing installations with kube-proxy running as a DaemonSet, remove it using the commands below. Careful: this will break existing service connections and stop service-related traffic until the Cilium replacement has been installed:

$ kubectl -n kube-system delete ds kube-proxy

$ # Delete the configmap as well to avoid kube-proxy being reinstalled during a kubeadm upgrade (works only for K8s 1.19 and newer)

$ kubectl -n kube-system delete cm kube-proxy

$ # Run on each node with root permissions:

$ iptables-save | grep -v KUBE | iptables-restore

Make sure you have Helm 3 installed. Helm 2 is no longer supported.

Set up the Helm repository:

helm repo add cilium https://helm.cilium.io/

Next, generate the required YAML files and deploy them.

Important: Replace REPLACE_WITH_API_SERVER_IP and REPLACE_WITH_API_SERVER_PORT below with the concrete control-plane node IP address and the kube-apiserver port number reported by kubeadm init (usually port 6443).

For existing EKS installations, we can easily find the endpoint with:

$ kubectl get ep kubernetes -o wide

NAME ENDPOINTS AGE

kubernetes 10.207.10.63:443 69d

Specifying this is necessary because kubeadm init was run explicitly without setting up kube-proxy. As a consequence, although KUBERNETES_SERVICE_HOST and KUBERNETES_SERVICE_PORT are exported to the environment with the ClusterIP of the kube-apiserver service, there is no kube-proxy in our setup provisioning that service. The Cilium agent therefore needs to be made aware of this information through the configuration below.

helm install cilium cilium/cilium --version 1.11.6 \
  --namespace kube-system \
  --set kubeProxyReplacement=strict \
  --set k8sServiceHost=REPLACE_WITH_API_SERVER_IP \
  --set k8sServicePort=REPLACE_WITH_API_SERVER_PORT \
  --set hubble.listenAddress=":4244" \
  --set hubble.relay.enabled=true \
  --set hubble.ui.enabled=true
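Instead of copying the IP address and port by hand, the two placeholders can be derived from an endpoint string of the form IP:PORT. The sketch below is one possible way to do this; api_server_helm_flags is a hypothetical helper of our own, and the kubectl query in the comment assumes that the first address and port of the kubernetes Endpoints object are the ones you want, which you should verify on clusters with several API server endpoints.

```shell
# Turn an "IP:PORT" endpoint into the two Helm flags used in the install command.
# api_server_helm_flags is a hypothetical helper name, not part of Helm or Cilium.
api_server_helm_flags() {
  endpoint="$1"
  host="${endpoint%:*}"    # everything before the last colon
  port="${endpoint##*:}"   # everything after the last colon
  printf '%s %s\n' "--set k8sServiceHost=$host" "--set k8sServicePort=$port"
}

# Possible usage (assumption: the first endpoint address and port are correct):
#   endpoint="$(kubectl get ep kubernetes \
#     -o jsonpath='{.subsets[0].addresses[0].ip}:{.subsets[0].ports[0].port}')"
#   api_server_helm_flags "$endpoint"
```

The printed flags can then be pasted into the helm install command in place of the two REPLACE_WITH_ placeholders.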

By default, Cilium will automatically mount the cgroup v2 filesystem required to attach BPF cgroup programs at the path /run/cilium/cgroupv2. To do that, it needs to temporarily mount the host /proc inside an init container launched by the DaemonSet. If you need to disable the auto-mount, specify --set cgroup.autoMount.enabled=false and set the host mount point where the cgroup v2 filesystem is already mounted by using --set cgroup.hostRoot. For example, if it is not already mounted, you can mount the cgroup v2 filesystem by running mount -t cgroup2 none /sys/fs/cgroup on the host, and then specify --set cgroup.hostRoot=/sys/fs/cgroup.

This will install Cilium as a CNI plugin with the eBPF kube-proxy replacement to implement handling of Kubernetes services of type ClusterIP, NodePort, LoadBalancer and services with externalIPs. On top of that the eBPF kube-proxy replacement also supports hostPort for containers such that using portmap is not necessary anymore.

Finally, verify that Cilium has come up correctly on all nodes and is ready to operate:

$ kubectl -n kube-system get pods -l k8s-app=cilium

NAME READY STATUS RESTARTS AGE

cilium-fmh8d 1/1 Running 0 10m

cilium-mkcmb 1/1 Running 0 10m

Note that in the Helm configuration above, kubeProxyReplacement has been set to strict mode. This means that the Cilium agent will bail out if the underlying Linux kernel support is missing.
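To confirm which mode the agent actually ended up in, the KubeProxyReplacement line of the agent's status output can be inspected. The helper below is a small sketch that reads that output from standard input; the function name is our own, and the exact layout of the status line (the mode as the second whitespace-separated field) is an assumption based on typical agent output that may differ between Cilium versions.

```shell
# Print the KubeProxyReplacement mode found in agent status output on stdin.
# kube_proxy_replacement_mode is a hypothetical helper name.
# Assumption: the line looks like "KubeProxyReplacement:   Strict   [...]".
kube_proxy_replacement_mode() {
  awk '$1 == "KubeProxyReplacement:" { print $2 }'
}

# Possible usage against a running agent:
#   kubectl -n kube-system exec ds/cilium -- cilium status | kube_proxy_replacement_mode
```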

Validating the setup

For a quick assessment of whether any pods are not managed by Cilium, the Cilium CLI will print the number of managed pods. If this prints that all of the pods are managed by Cilium, then there is no problem:

$ cilium status

Cilium: OK

Operator: OK

Hubble: OK

ClusterMesh: disabled

Deployment cilium-operator

Desired: 2, Ready: 2/2, Available: 2/2

Deployment hubble-relay

Desired: 1, Ready: 1/1, Available: 1/1

Deployment hubble-ui

Desired: 1, Ready: 1/1, Available: 1/1

DaemonSet cilium

Desired: 2, Ready: 2/2, Available: 2/2

Containers:

cilium-operator Running: 2

hubble-relay Running: 1

hubble-ui Running: 1

cilium Running: 2

Cluster Pods: 5/5 managed by Cilium
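In a script or CI job the same check can be automated by parsing the Cluster Pods line. This is only a sketch: cluster_pods_all_managed is a hypothetical helper name, and it assumes the line keeps the "Cluster Pods: N/M managed by Cilium" shape shown in the output above.

```shell
# Succeeds (exit status 0) only if the "Cluster Pods: N/M" line on stdin has N equal to M.
# cluster_pods_all_managed is a hypothetical helper name, not a Cilium CLI command.
cluster_pods_all_managed() {
  awk '/Cluster Pods:/ { split($3, n, "/"); found = 1; ok = (n[1] == n[2]) }
       END { exit !(found && ok) }'
}

# Possible usage:
#   cilium status | cluster_pods_all_managed || echo "some pods are not managed by Cilium"
```

The function also fails when no Cluster Pods line is present at all, so an unexpected output format is not silently treated as success.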

As a convenience, add the following function to your .zshrc

# Hubble port forwarding function

hubble_port_forward() {

  kubectl port-forward -n "kube-system" svc/hubble-ui --address 0.0.0.0 --address :: 12000:80 &

  kubectl port-forward -n "kube-system" svc/hubble-relay --address 0.0.0.0 --address :: 4245:80 && fg

}

and execute it locally in your shell. It should print the following when running correctly:

Forwarding from 0.0.0.0:4245 -> 4245

Forwarding from [::]:4245 -> 4245

Forwarding from 0.0.0.0:12000 -> 8081

Forwarding from [::]:12000 -> 8081

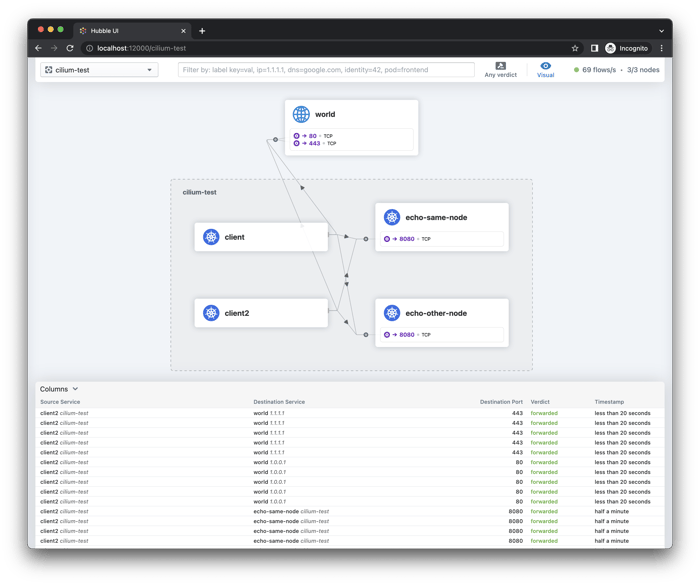

You can view the Hubble UI at http://localhost:12000/

Cilium connectivity test

The Cilium connectivity test deploys a series of services, deployments, and CiliumNetworkPolicy which will use various connectivity paths to connect to each other. Connectivity paths include with and without service load-balancing and various network policy combinations.

The connectivity tests will only work in a namespace with no other pods or network policies applied. If a Cilium Clusterwide Network Policy is enabled, that may also break this connectivity check.

To run the connectivity tests, create an isolated test namespace called cilium-test to deploy the tests in.

$ kubectl create ns cilium-test

$ cilium connectivity test

Watching the Hubble UI locally via the port forward we set up earlier, we can observe this test in Hubble.
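The same flows can also be inspected from the command line and summarised with ordinary text tools. The sketch below counts dropped flows in JSON flow events read from standard input, one event per line. The helper name count_dropped_flows is our own; it assumes the events carry a "verdict" field with the value "DROPPED", and the usage line assumes the hubble CLI is installed and can reach Hubble Relay through the port forward on 4245.

```shell
# Count JSON flow events on stdin whose verdict is DROPPED (one event per line).
# count_dropped_flows is a hypothetical helper name, not a Hubble command.
count_dropped_flows() {
  # grep -c exits non-zero when the count is 0, so normalise the exit status.
  grep -E -c '"verdict":[[:space:]]*"DROPPED"' || true
}

# Possible usage (assumption: hubble CLI installed, relay forwarded to localhost:4245):
#   hubble observe --namespace cilium-test --last 1000 --output json | count_dropped_flows
```

A non-zero count is not necessarily a problem here, since a connectivity test may deliberately exercise policies that deny traffic.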

Wrapping it up

Cilium takes security seriously. Security teams can be sure that Cilium provides critical, built-in, and on-by-default protections to secure their Kubernetes environments. Additionally, these teams can take advantage of the powerful security observability data provided by Hubble to get full insights into the network activity in their environment.

Thanks for reading!